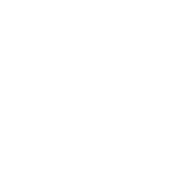

Harrison.rad.1 is Open: Test, Challenge and Explore Our Radiology Foundation Model Today

Experience Harrison.rad.1

Explore what’s possible when foundation models are purpose-built for radiology.

As rising imaging volumes, workforce shortages and the growing complexity of medical images put pressure on radiology, the specialised capabilities of foundation models have the potential to accelerate the development of tools that scale healthcare globally.

Dr. Jarrel Seah, Harrison.ai Chief Medical & AI Officer, poses a question at Launch Day 2025:

“What if, instead of building individual algorithms for every finding, every X-ray modality, every clinical question, we built one foundation model that could understand all of these?”

That model is Harrison.rad.1.

Watch this exclusive Launch Day 2025 clip as Dr. Seah walks through Harrison.rad.1’s performance in real-world challenges and demonstrates the radiology LLM in action.

Disclaimer: Harrison.rad.1 is available for research use only. It is not intended for clinical use, which includes use, directly or indirectly, in the diagnosis of disease or other conditions, or in the cure, mitigation, monitoring, treatment, or prevention of disease.


Putting Harrison.rad.1 to the Test

“Foundation models need to be tested, not just promised,” Dr. Jarrel Seah highlighted.

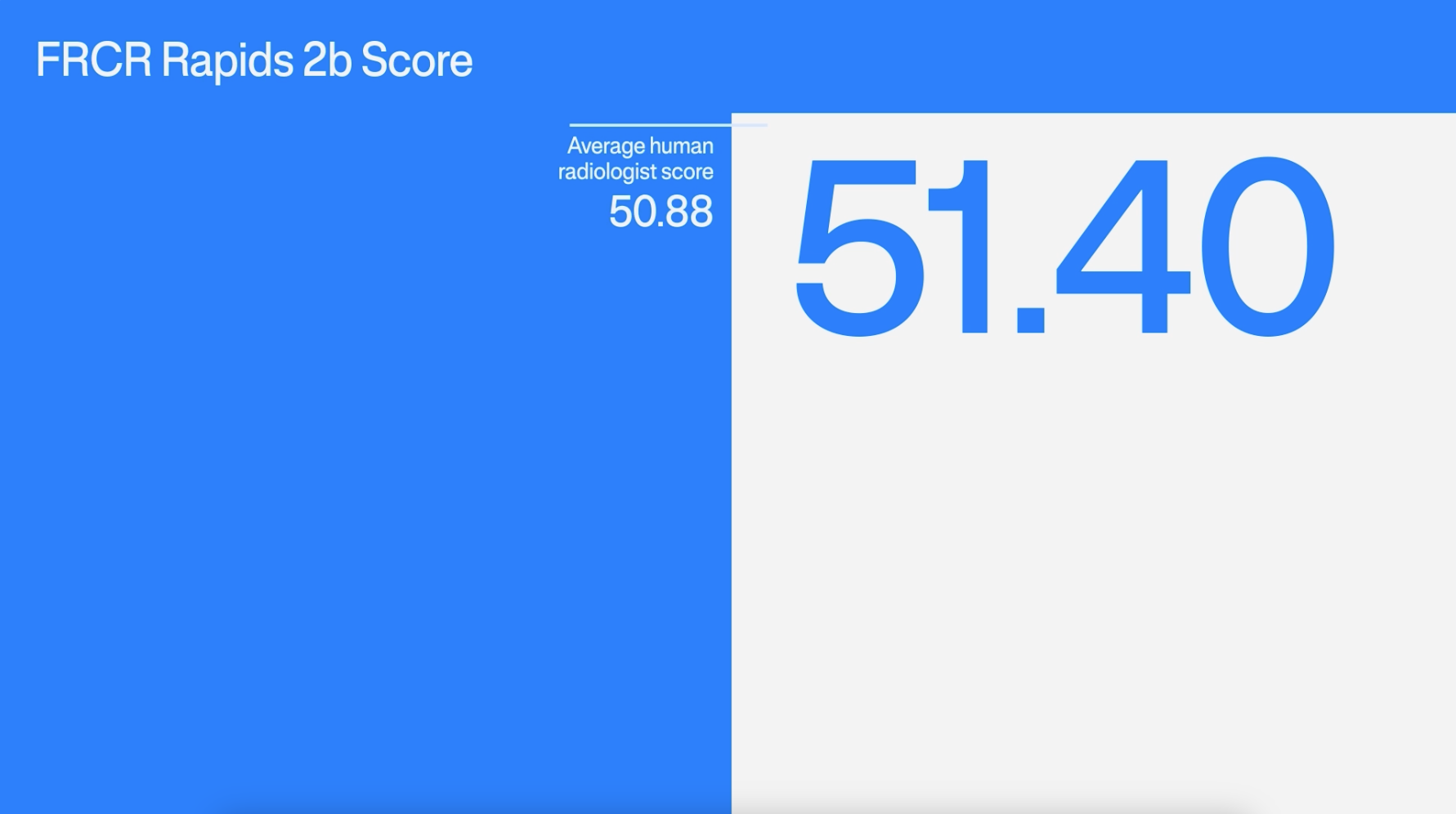

When we introduced Harrison.rad.1, we benchmarked it against common industry standards. It achieved a score of 51.40 on the FRCR 2B Rapids exam, the same credentialing exam taken by radiologists in the UK. Jarrel noted, “That exceeds the average performance of human radiologists retaking that exam within a year of passing.”

But real-world results matter most.

In May 2025, Mass General Brigham AI Arena and the American College of Radiology ran an independent challenge at the ACR Annual Meeting.

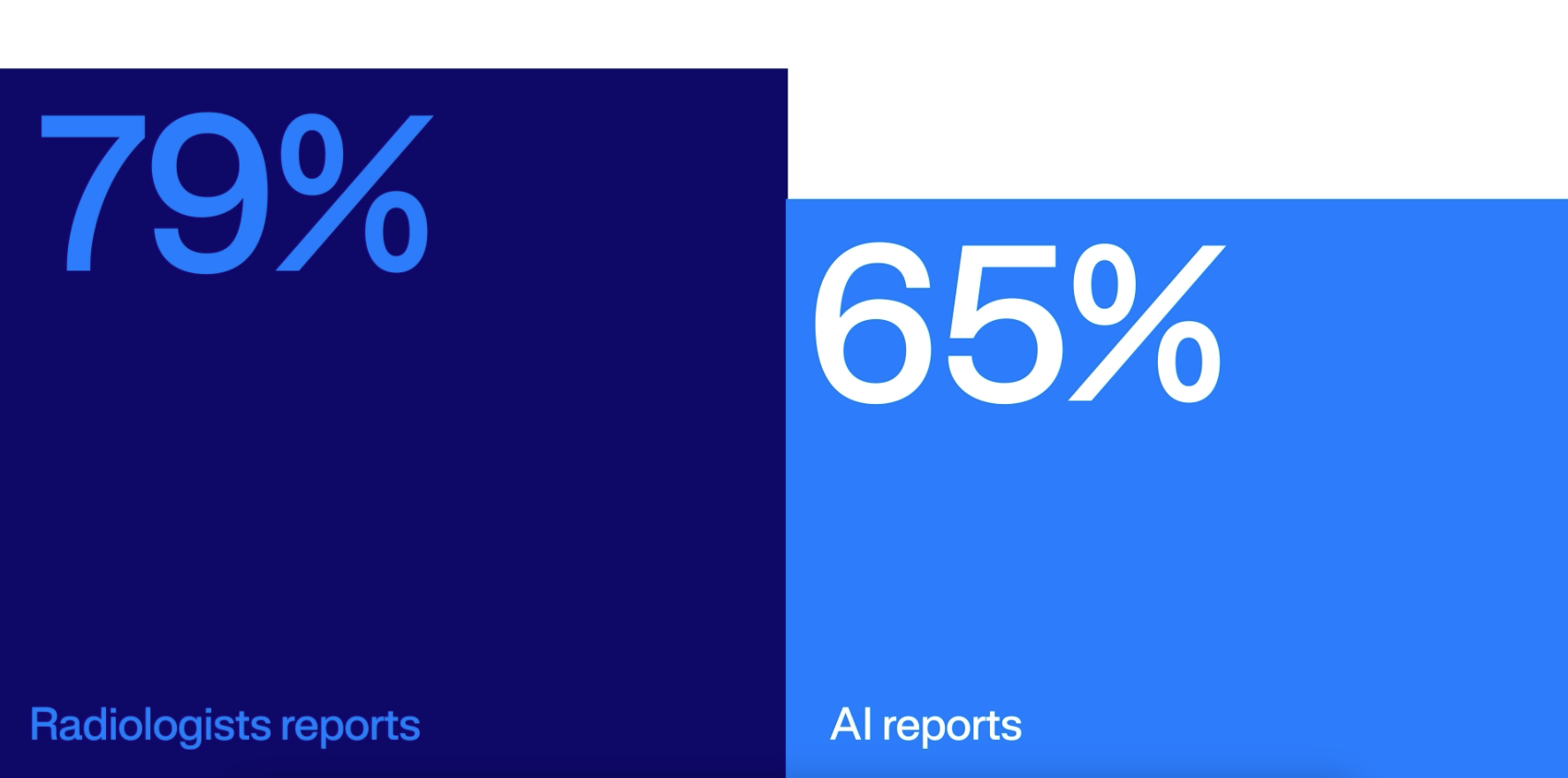

The question: could board-certified radiologists tell the difference between AI-generated and human-written reports?

- 113 radiologists

- 2,840 blinded evaluations

The results: reports powered by Harrison.rad.1 achieved a 65.4% acceptability rate, compared to 79.6% for radiologist-written reports.
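To put those figures side by side, here is a minimal Python sketch that derives the gap between the two acceptability rates from the numbers quoted above. The function and variable names are hypothetical and chosen for illustration, and the per-radiologist average assumes evaluations were spread evenly across participants, which the challenge organisers have not stated here.

```python
# Figures quoted in the article; nothing else is assumed about the raw data.
RADIOLOGISTS = 113
EVALUATIONS = 2840
AI_ACCEPTABILITY_PCT = 65.4      # reports powered by Harrison.rad.1
HUMAN_ACCEPTABILITY_PCT = 79.6   # radiologist-written reports


def summarize(ai_pct, human_pct, n_evals, n_readers):
    """Derive simple comparison figures from the published percentages."""
    return {
        # Absolute gap, in percentage points.
        "gap_pp": round(human_pct - ai_pct, 1),
        # AI acceptability as a fraction of the human rate.
        "relative_to_human": round(ai_pct / human_pct, 3),
        # Average only; assumes an even split across radiologists.
        "avg_evals_per_radiologist": round(n_evals / n_readers, 1),
    }


summary = summarize(
    AI_ACCEPTABILITY_PCT, HUMAN_ACCEPTABILITY_PCT, EVALUATIONS, RADIOLOGISTS
)
print(summary)
```

Read this way, the AI-drafted reports trail radiologist-written reports by 14.2 percentage points, which is roughly 82% of the human acceptability rate.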

Reflecting on the real-world challenge, Jarrel noted, “Mass General Brigham AI Arena said that these results show that draft reporting AI is improving at breakneck speed and is closer than ever to meaningfully enhancing radiologist efficiency.”

Why Harrison.rad.1 Performs So Well

The key lies in the data. Harrison.rad.1 is built on millions of DICOM images, radiologist annotations, and structured reports spanning all X-ray modalities.

Jarrel explained, “Why does Harrison.rad.1 perform at this level? Well, it comes down to comprehensive training data. This model leverages the same high-quality radiology data sets that power our Harrison.ai clinical decision support tools.”

“The most comprehensive detection algorithms on the market are now powering the most promising foundation model for radiology reporting. And that’s not a coincidence,” Jarrel added.

See It in Action: Collaborate, Validate, Innovate

“We believe the field advances fastest when researchers can validate, test, and push these models forward,” Jarrel explained. That’s why Harrison.rad.1 is now available for:

- Benchmarking and validation studies

- Clinical trials and workflow integration testing

- Safety and reliability research

“If you’re working on radiology AI benchmarks, multimodal foundation models, or clinical validation studies, we want to work with you,” Jarrel added.

Harrison.rad.1 is available today. “Try it, test it, challenge it. Help us make it better,” said Jarrel.

Book a demo with our team at RSNA to see Harrison.rad.1 in action.
